Last week, I had the privilege of attending the 2014 AAAI Fall Symposium on Knowledge, Skill, and Behavior Transfer in Autonomous Robots. My own interest in this topic comes from my goal of being able to program a mobile robot by driving it around. I presented a paper describing an approach for doing this. It improves upon my previous work by automatically associating actions with the Growing Neural Gas nodes, rather than relying on human input for specifying an action for every node.

To demonstrate the versatility of what I had implemented, on the morning of the talk I drove my Lego Mindstorms EV3 robot (running leJOS) around part of my hotel room for a couple of minutes, teaching it to avoid obstacles. I then included in my presentation a nice video of the purely visual obstacle avoidance it had learned in this short time span.

Even more fun was the poster session. I had promised to bring the robot with me to the poster session at the end of my oral presentation. At the start of the poster session, I drove the robot around the poster area for about two or three minutes. I was careful to make sure I introduced it to numerous human legs, so that it would learn to avoid them. I then set it loose, and it demonstrated very nice visual obstacle avoidance for about the next hour or so. When learning, it hadn't seen any white sneakers, so it did run into a couple of people, but for the most part it did great!

I'm hoping to release the source code for my implementation once the semester ends and I have a chance to clean things up a bit.

There were some interesting trends in the presentations I saw. Several people, including Peter Stone and Stéphane Doncieux, presented work in which learning happened in simulation. Stone's work "closed the loop" by transferring the learned skills to a physical robot, and even learned from the physical robot to retrain the simulation. This was an aspect of transfer I hadn't really thought about very much.

Several other presenters, including Gabriel Barth-Maron, David Abel, Benjamin Rosman, and Manuela Veloso, were focused on Markov Decision Processes and reinforcement learning. That isn't really the focus of my current work, although I've explored it in the past and I may do so again in the future. Much of the presented work involved ways of extracting transferrable domain knowledge from an MDP policy that had been learned with a particular reward function. By transferring that knowledge to learning a policy for a new reward function but in the same domain, learning can be accelerated.

Finally, there were several presenters, including Tesca Fitzgerald, Andrea Thomaz, and Yiannis Demiris, who showcased work in demonstration learning. The first two are interested in humans demonstrating tasks for humanoid robots, by either showing start/stop states for arrangements of objects, or even by directly manipulating their arms. Of particular interest was Tesca Fitzgerald's efforts towards developing a spectrum of related tasks for which knowledge transfer is possible to varying degrees. Task knowledge that is not transferred is handled with a planner; she had a great video of a robot hitting a ping-pong ball to illustrate what she had in mind. Yiannis Demeris does a lot of work with assistive robotics for the disabled. He showed some fascinating work in which the robots learned to help their clients, but only to the degree that the clients wanted the help. He's also done some work with robots teaching humans various tasks. Particularly amusing were the humanoid robot dance instructors!

I received some great feedback on my research from Yiannis Demiris, Nathan Ratliff, Matteo Leonetti, Eric Eaton, Pooyan Fazli, Matthew Taylor, Gabriel Barth-Maron, David Abel, Benjamin Rosman, Tesca Fitzgerald, Bruce Johnson, Cynthia Matuszek, and Laurel Riek. I returned home with enough new ideas to keep me very busy for the next couple of years. Thanks again to everyone who helped make this a great symposium!

Observations and commentary on robotics, AI, machine learning, and computer science (and academic life) in general.

Friday, November 21, 2014

Sunday, November 9, 2014

Obtaining a tenure-track position in Computer Science at a liberal arts college

The essay Beyond Research-Teaching Divide has some good insights for applying for a tenure-track job at a liberal arts college. First, a concise overview as to what this type of career entails, which is certainly consistent with my experience at Hendrix College:

This last paragraph also rings true:

The faculty members of many small colleges enjoy robust support with reasonable expectations for research output. We teach eager, inquisitive students who respect the title of “professor” (even when they do call you by your first name), whose whip-smart input enriches research almost as much as engaging with graduate students can.The author then describes a valuable lesson learned:

I learned how to see small departments’ needs and gaps, thereby arming me to write directly to issues that did not necessarily announce themselves in job postings. Is a history department relying on its Latin Americanist to cover its Canadian history offerings? Mock up a syllabus that will lighten that load, and remark on it in your job letter. ... [W]hen small colleges hire, my experience shows that they hire people who have expertise their department lacks.While this specific example is not directly pertinent to applying for a computer science position, the general concept definitely is. Get to know the current faculty. Determine their interests and aptitudes. Look at what they publish and what they habitually teach. From there, try to show how you would strengthen their program. Typically, what a small department seeks is to increase its breadth. In your cover letter, talk about how you could contribute in this way.

This last paragraph also rings true:

[S]trong letters are those that help us see a potential future colleague in front of a classroom, sharing a coffee with one of our students, and seated around our department’s meeting table (yup, we fit around one table; it’s probably not the room-filled affair you may have attended in graduate school). The best letters tell us more than what you think; they help us feel why you care about sharing those ideas with undergraduates in a classroom as much as with peer scholars in journals and books. Such letters exude enthusiasm for teaching without getting mired down in tedious assignment examples; they indicate your ability to model the research process, or (better) how you actively involve undergraduates in your research agenda.Both of these last two excerpts are extremely helpful advice for writing a cover letter for a job at a small liberal arts college. Study the department's web pages, as well as the college's catalog information for the program. And be sure to convey your enthusiasm for teaching a variety of courses at all levels, especially outside your research area. Mentioning one or two areas of genuine interest beyond your specialty can definitely be helpful.

Thursday, October 30, 2014

(In)feasibility of self-driving cars; lessons from past masters

A recent article about the challenges involved with self-driving cars contains many valuable observations. First, the Google self-driving car depends upon maps with an astonishing level of detail:

So what should we be remembering from the past? The previous revolution in robotics was the invention of subsumption by Rodney Brooks. (This is what everyone was excited about back when I started graduate school.) Let's look at how Brooks described his goals:

Even though this pioneering work in subsumption is almost 30 years old, there are still useful lessons to be learned from it. I will be presenting a paper describing my recent work on learning subsumption behaviors from imitating human actions at the AAAI Fall Symposium on Knowledge, Skill, and Behavior Transfer in a few weeks. Something important I have learned over the years is that older research that does not conform to current fads can still be a source of cutting-edge ideas. In fact, when other researchers are clustering around a particular fad, revisiting older ideas can often help us see an opportunity to make a contribution that others are overlooking.

[T]he Google car was able to do so much more than its predecessors in large part because the company had the resources to do something no other robotic car research project ever could: develop an ingenious but extremely expensive mapping system. These maps contain the exact three-dimensional location of streetlights, stop signs, crosswalks, lane markings, and every other crucial aspect of a roadway.Creating these maps is not just a matter of adapting Google Maps to the task:

[B]efore [Google]'s vision for ubiquitous self-driving cars can be realized, all 4 million miles of U.S. public roads will be need to be mapped, plus driveways, off-road trails, and everywhere else you'd ever want to take the car. So far, only a few thousand miles of road have gotten the treatment, [...]. The company frequently says that its car has driven more than 700,000 miles safely, but those are the same few thousand mapped miles, driven over and over again.And there is this very crucial caveat:

Another problem with maps is that once you make them, you have to keep them up to date, a challenge Google says it hasn't yet started working on. Considering all the traffic signals, stop signs, lane markings, and crosswalks that get added or removed every day throughout the country, keeping a gigantic database of maps current is vastly difficult.Odd as it may seem in the constantly changing world of computing, it is important to remember the past. Every now and then, in any research community, a researcher develops an important new innovation that proves hugely influential, often eclipsing other valuable work that is being undertaken. For example, Sebastian Thrun, among others, has done phenomenally important work in probabilistic techniques for robot navigation. His work provides the conceptual foundation for the Google self-driving car. Much current robotics research is dedicated to extending and improving ideas he pioneered.

So what should we be remembering from the past? The previous revolution in robotics was the invention of subsumption by Rodney Brooks. (This is what everyone was excited about back when I started graduate school.) Let's look at how Brooks described his goals:

Consider one of the key requirements Brooks specifies for a competent Creature:I wish to build completely autonomous mobile agents that co-exist in the world with humans, and are seen by those humans as intelligent beings in their own right. I will call such agents Creatures.

In describing the subsumption approach, Brooks goes on to describe the role of representation in his scheme:A Creature should be robust with respect to its environment; minor changes in the properties of the world should not lead to total collapse of the Creature's behavior; rather one should expect only a gradual change in capabilities of the Creature as the environment changes more and more.

All of this is summarized in his famous conclusion:[I]ndividual layers extract only those aspects of the world which they find relevant-projections of a representation into a simple subspace, if you like. Changes in the fundamental structure of the world have less chance of being reflected in every one of those projections than they would have of showing up as a difficulty in matching some query to a central single world model.

Now, as I see it, the lesson from the Google self-driving car, read in light of the thought of Rodney Brooks, is that developing a high-fidelity representation is a symptom of our ongoing inability to develop a general artificial intelligence, in spite of the almost unthinkable level of resources that Google is throwing at this project. It is easy to be a critic from the outside, not experiencing what the engineers on the inside are seeing, but I can't help but wonder whether revisiting the ideas that Brooks introduced back in the 80s and 90s might be conceptually helpful in their endeavor.When we examine very simple level intelligence we find that explicit representations and models of the world simply get in the way. It turns out to be better to use the world as its own model.

Even though this pioneering work in subsumption is almost 30 years old, there are still useful lessons to be learned from it. I will be presenting a paper describing my recent work on learning subsumption behaviors from imitating human actions at the AAAI Fall Symposium on Knowledge, Skill, and Behavior Transfer in a few weeks. Something important I have learned over the years is that older research that does not conform to current fads can still be a source of cutting-edge ideas. In fact, when other researchers are clustering around a particular fad, revisiting older ideas can often help us see an opportunity to make a contribution that others are overlooking.

Friday, September 26, 2014

EV3 webcams; Lisp and Smalltalk

It's always interesting to read about ways in which one's work has had an impact on others. Here are a couple of recent examples that I am happy to have the opportunity to reference:

First, I'd like to thank Andy Shaw and the leJOS team for their support of my webcam work on the EV3. They were able to incorporate my work into the leJOS code base, for the benefit of anyone wishing to use the leJOS platform for computer vision on the EV3. It was great fun getting it working this past summer. It would not have been possible without the terrific infrastructure their team has put into place, as well as their hands-on assistance as my project proceeded in fits and starts.

This semester, I am teaching a course focusing on the use of computer vision as a key sensor in robot programming. In the process, I'm experimenting with different vision algorithms on the EV3 platform. After the end of the semester, I plan to post in some detail about what worked best for us.

Second, Malcolm McCrimmon, who several years ago was one of my students, has written a fascinating reflection about what makes both Lisp and Smalltalk such powerful languages. I'm a bit humbled to see that this reflection was prompted by an impromptu four-word answer I gave to one of his questions in class. I'm teaching the course again this semester, and I'm looking forward to incorporating some of his insights into our classroom examination of these languages.

First, I'd like to thank Andy Shaw and the leJOS team for their support of my webcam work on the EV3. They were able to incorporate my work into the leJOS code base, for the benefit of anyone wishing to use the leJOS platform for computer vision on the EV3. It was great fun getting it working this past summer. It would not have been possible without the terrific infrastructure their team has put into place, as well as their hands-on assistance as my project proceeded in fits and starts.

This semester, I am teaching a course focusing on the use of computer vision as a key sensor in robot programming. In the process, I'm experimenting with different vision algorithms on the EV3 platform. After the end of the semester, I plan to post in some detail about what worked best for us.

Second, Malcolm McCrimmon, who several years ago was one of my students, has written a fascinating reflection about what makes both Lisp and Smalltalk such powerful languages. I'm a bit humbled to see that this reflection was prompted by an impromptu four-word answer I gave to one of his questions in class. I'm teaching the course again this semester, and I'm looking forward to incorporating some of his insights into our classroom examination of these languages.

Friday, September 19, 2014

Lego EV3 Model for Computer Vision

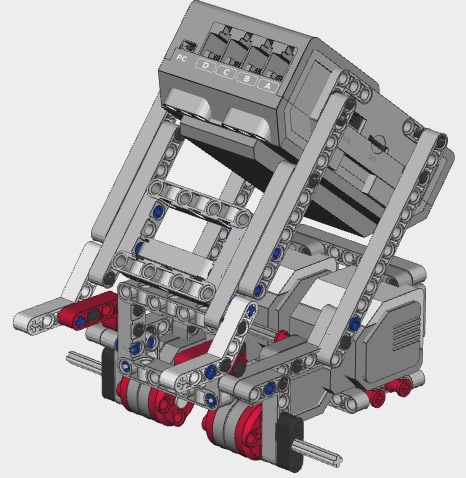

Using the EV3 platform for computer vision is not just a matter of plugging the camera into the USB port with a properly functioning driver in place. It is also important to get the right physical design for development and debugging.

I'd like to share a model I have designed to facilitate this. The LCD screen is rear-facing, at an angle, so as to allow a developer to see the images that the robot is acquiring as it navigates its environment.

The webcam can be inserted inside the open-center beam in the front of the robot. I positioned another 5-beam above the webcam to hold it in place a bit better. (That part is not included in these instructions, but you can see it in the photo.) Some of my students used rubber bands to hold it in more firmly. I used a Logitech C270 webcam.

I built it using the Lego Mindstorms EV3 Education Kit. To attach the wheels, follow the instructions on pages 22-25 and 32-33 of the instruction booklet that is included with that kit.

I do not know if the parts available in the regular kit are sufficient to build it. Feedback would be welcome from anyone who has a chance to try that out.

I created the CAD model using Bricksmith.

Without further ado, then, here is the model:

We start with the left motor:

I'd like to share a model I have designed to facilitate this. The LCD screen is rear-facing, at an angle, so as to allow a developer to see the images that the robot is acquiring as it navigates its environment.

The webcam can be inserted inside the open-center beam in the front of the robot. I positioned another 5-beam above the webcam to hold it in place a bit better. (That part is not included in these instructions, but you can see it in the photo.) Some of my students used rubber bands to hold it in more firmly. I used a Logitech C270 webcam.

I built it using the Lego Mindstorms EV3 Education Kit. To attach the wheels, follow the instructions on pages 22-25 and 32-33 of the instruction booklet that is included with that kit.

I do not know if the parts available in the regular kit are sufficient to build it. Feedback would be welcome from anyone who has a chance to try that out.

I created the CAD model using Bricksmith.

Without further ado, then, here is the model:

We start with the left motor:

Now we begin work on the right motor:

Monday, August 11, 2014

Brain-controlled user interfaces

Brain-controlled user interfaces are an important wave of the future. I think their most essential application is in making it possible for the physically disabled to interact with the world, but by no means do I think we should stop there.

To this end, I'd like to mention the OpenBCI initiative. They aim to create an open platform for brain-based computing interfaces. They are using Arduino as the basis for the system. So for about $500 you can get a brain-reading board. If this sort of thing is of interest to you I highly recommend checking it out.

Personally, I'd love to try this out as a means of remotely controlling and training a robot.

To this end, I'd like to mention the OpenBCI initiative. They aim to create an open platform for brain-based computing interfaces. They are using Arduino as the basis for the system. So for about $500 you can get a brain-reading board. If this sort of thing is of interest to you I highly recommend checking it out.

Personally, I'd love to try this out as a means of remotely controlling and training a robot.

Monday, August 4, 2014

Webcam with Lego Mindstorms EV3, part 3 (Java Demo)

Having rebuilt the kernel to include the Video4Linux2 drivers, and having written a JNI driver, we are now ready to write a Java program that demonstrates the webcam in action. We will create two classes to do this. The first class will represent the YUYV format image that was acquired. This class is designed to provide a layer of abstraction above the byte array returned by the grabFrame() method in Webcam.java. It also provides a display() method that will write a value to each corresponding pixel on the LCD display. Since the LCD is a monochrome display, we select the pixel display value based on whether the image pixel Y value is above or below the mean Y value across the image.

import java.io.IOException;

import lejos.hardware.lcd.LCD;

public class YUYVImage {

private byte[] pix;

private int width, height;

YUYVImage(byte[] pix, int width, int height) {

this.pix = pix;

this.width = width;

this.height = height;

}

public static YUYVImage grab() throws IOException {

return new YUYVImage(Webcam.grabFrame(), Webcam.getWidth(), Webcam.getHeight());

}

public int getNumPixels() {return pix.length / 2;}

public int getWidth() {return width;}

public int getHeight() {return height;}

public int getY(int x, int y) {

return getValueAt(2 * (y * width + x));

}

public int getU(int x, int y) {

return getValueAt(getPairBase(x, y) + 1);

}

public int getV(int x, int y) {

return getValueAt(getPairBase(x, y) + 3);

}

private int getValueAt(int index) {

return pix[index] & 0xFF;

}

private int getPairBase(int x, int y) {

return 2 * (y * width + (x - x % 2));

}

public int getMeanY() {

int total = 0;

for (int i = 0; i < pix.length; i += 2) {

total += pix[i];

}

return total / getNumPixels();

}

public void display() {

int mean = getMeanY();

for (int i = 0; i < pix.length; i += 2) {

int x = (i / 2) % width;

int y = (i / 2) / width;

LCD.setPixel(x, y, pix[i] > mean ? 1 : 0);

}

}

}

import java.io.IOException;

import lejos.hardware.Button;

import lejos.hardware.lcd.LCD;

public class CameraDemo {

public static void main(String[] args) {

try {

Webcam.start();

while (!Button.ESCAPE.isDown()) {

YUYVImage img = YUYVImage.grab();

img.display();

}

double fps = Webcam.end();

LCD.clear();

System.out.println(fps + " fps");

while (!Button.ESCAPE.isDown());

} catch (IOException ioe) {

ioe.printStackTrace();

System.out.println("Driver exception: " + ioe.getMessage());

}

}

}

Sunday, August 3, 2014

Webcam with Lego Mindstorms EV3, part 2 (Java Native Interface)

If you have succeeded in rebuilding the kernel to include the Video4Linux2 drivers, the next step is to make the webcam available from Java. The easiest way to do this is to use Java Native Interface (JNI) to write a driver in C that interfaces with Java. It is again essential to develop on a Linux PC to get this working.

All code included in this blog post is public domain, and may be freely reused without any restrictions. I want to emphasize that the code I am sharing here is more of a proof-of-concept than a rigorously built library. My intention is for this to be a starting point for anyone interested to create a library. (I might do so myself eventually.)

To start writing a JNI program, one must create a Java class with stubs to be written in C. The following Java program contains the stubs I used:

To compile the JNI C code, we need to use our cross-compiler to generate a shared library. Once this is done, we need to copy the shared library onto the EV3, specifically, into a directory where it will actually be found at runtime. The following commands were sufficient for achieving this:

At this point, it is convenient to have an easy test to make sure the system works. To that end, add the following main() method to Webcam.java. You should be able to run it just like any other LeJOS EV3 program. It will print a period for every frame it successfully grabs. Of course, make sure your webcam is plugged in before you try this!

Finally, we are ready to examine the JNI C code. To create this implementation, I adapted the Video4Linux2 example driver found at http://linuxtv.org/downloads/v4l-dvb-apis/capture-example.html. Here is an overview of my modifications to the driver:

All code included in this blog post is public domain, and may be freely reused without any restrictions. I want to emphasize that the code I am sharing here is more of a proof-of-concept than a rigorously built library. My intention is for this to be a starting point for anyone interested to create a library. (I might do so myself eventually.)

To start writing a JNI program, one must create a Java class with stubs to be written in C. The following Java program contains the stubs I used:

public class Webcam {

// Causes the native library to be loaded from the system.

static {System.loadLibrary("webcam");}

// Three primary methods for use by clients:

// Call start() before doing anything else.

public static void start(int w, int h) throws java.io.IOException {

width = w;

height = h;

setup();

start = System.currentTimeMillis();

frames = 0;

}

// Call grabFrame() every time you want to see a new image.

// For best results, call it frequently!

public static byte[] grabFrame() throws java.io.IOException {

byte[] result = makeBuffer();

grab(result);

frames += 1;

return result;

}

// Call end() when you are done grabbing frames.

public static double end() throws java.io.IOException {

duration = System.currentTimeMillis() - start;

dispose();

return 1000.0 * frames / duration;

}

// Three native method stubs, all private:

// Called by start() before any frames are grabbed.

private static native void setup() throws java.io.IOException;

// Called by grabFrame(), which creates a new buffer each time.

private static native void grab(byte[] img) throws java.io.IOException;

// Called by end() to clean things up.

private static native void dispose() throws java.io.IOException;

// Specified by the user, and retained for later reference.

private static int width, height;

// Record-keeping data to enable calculation of frame rates.

private static int frames;

private static long start, duration;

// Utility methods

public static int getBufferSize() {return width * height * 2;}

public static byte[] makeBuffer() {return new byte[getBufferSize()];}

public static void start() throws java.io.IOException {

start(160, 120);

}

public static int getWidth() {return width;}

public static int getHeight() {return height;}

}

#include <jni.h>

JNIEXPORT void JNICALL Java_Webcam_setup

(JNIEnv *, jclass);

JNIEXPORT void JNICALL Java_Webcam_grab

(JNIEnv *, jclass, jbyteArray);

JNIEXPORT void JNICALL Java_Webcam_dispose

(JNIEnv *, jclass);

To compile the JNI C code, we need to use our cross-compiler to generate a shared library. Once this is done, we need to copy the shared library onto the EV3, specifically, into a directory where it will actually be found at runtime. The following commands were sufficient for achieving this:

sudo apt-get install libv4l-dev ~/CodeSourcery/Sourcery_G++_Lite/bin/arm-none-linux-gnueabi-gcc

-shared -o libwebcam.so WebcamImp.c -fpic

-I/usr/lib/jvm/java-7-openjdk-i386/include -std=c99 -Wall

scp libwebcam.so root@10.0.1.1:/usr/lib

At this point, it is convenient to have an easy test to make sure the system works. To that end, add the following main() method to Webcam.java. You should be able to run it just like any other LeJOS EV3 program. It will print a period for every frame it successfully grabs. Of course, make sure your webcam is plugged in before you try this!

// At the top of the file

import lejos.hardware.Button;

// Add to the Webcam.java class

public static void main(String[] args) throws java.io.IOException {

int goal = 25;

start();

for (int i = 0; i < goal; ++i) {

grabFrame();

System.out.print(".");

}

System.out.println();

double fps = end();

System.out.println(fps + " frames/s");

while (!Button.ESCAPE.isDown());

}

Finally, we are ready to examine the JNI C code. To create this implementation, I adapted the Video4Linux2 example driver found at http://linuxtv.org/downloads/v4l-dvb-apis/capture-example.html. Here is an overview of my modifications to the driver:

- I reorganized the code to match the Webcam.java specification:

- Java_Webcam_setup() calls open_device(), init_device(), and start_capturing().

- The interior loop in mainloop() became the basis for the implementation of Java_Webcam_grab().

- Java_Webcam_dispose() calls stop_capturing(), uninit_device(), and close_device().

- The Webcam.java main() largely takes over the responsibilities of main() in the driver.

- I converted all error messages to throws of java.io.IOException.

- I added a global 200-char buffer to store the exception messages.

- I only used the code for memory-mapped buffers. This worked fine for my camera; there might exist cameras that do not support this option.

- I forced the image format to YUYV. For image processing purposes, having intensity information is valuable, and using this format provides it with a minimum of hassle.

- I allow the user to suggest the image dimensions, but I also send back the actual width/height values the driver and camera agreed upon.

- The first frame grab after starting the EV3 consistently times out when calling select(), but all subsequent grabs work fine. I added a timeout counter to the frame grabbing routine to avoid spurious exceptions while still checking for timeouts.

- It may not be beautiful, but in my experience it works reliably.

Without further ado, then, here is the code:

#include "Webcam.h"

#include <stdio.h>

#include <stdlib.h>

#include <string.h>

#include <assert.h>

#include <getopt.h> /* getopt_long() */

#include <fcntl.h> /* low-level i/o */

#include <unistd.h>

#include <errno.h>

#include <sys/stat.h>

#include <sys/types.h>

#include <sys/time.h>

#include <sys/mman.h>

#include <sys/ioctl.h>

#include <linux/videodev2.h>

#define REPORT_TIMEOUT 1

#define DEVICE "/dev/video0"

#define EXCEPTION_BUFFER_SIZE 200

#define MAX_TIMEOUTS 2

#define FORMAT V4L2_PIX_FMT_YUYV

#define CLEAR(x) memset(&(x), 0, sizeof(x))

struct buffer {

void *start;

size_t length;

};

static int fd = -1;

struct buffer *buffers;

static unsigned int n_buffers;

static char exceptionBuffer[EXCEPTION_BUFFER_SIZE];

// Place error message in exceptionBuffer

jint error_exit(JNIEnv *env) {

fprintf(stderr, "Throwing exception:\n");

fprintf(stderr, "%s\n", exceptionBuffer);

return (*env)->ThrowNew(env, (*env)->FindClass(env, "java/io/IOException"),

exceptionBuffer);

}

jint errno_exit(JNIEnv *env, const char *s) {

sprintf(exceptionBuffer, "%s error %d, %s", s, errno, strerror(errno));

return error_exit(env);

}

static int xioctl(int fh, int request, void *arg) {

int r;

do {

r = ioctl(fh, request, arg);

} while (-1 == r && EINTR == errno);

return r;

}

jboolean open_device(JNIEnv *env) {

struct stat st;

if (-1 == stat(DEVICE, &st)) {

sprintf(exceptionBuffer, "Cannot identify '%s': %d, %s\n", DEVICE, errno,

strerror(errno));

return error_exit(env);

}

if (!S_ISCHR(st.st_mode)) {

sprintf(exceptionBuffer, "%s is no device\n", DEVICE);

return error_exit(env);

}

fd = open(DEVICE, O_RDWR /* required */ | O_NONBLOCK, 0);

if (-1 == fd) {

sprintf(exceptionBuffer, "Cannot open '%s': %d, %s\n", DEVICE, errno,

strerror(errno));

return error_exit(env);

}

return -1;

}

jboolean init_device(JNIEnv * env, jclass cls) {

struct v4l2_capability cap;

struct v4l2_cropcap cropcap;

struct v4l2_crop crop;

struct v4l2_format fmt;

struct v4l2_requestbuffers req;

unsigned int min;

if (-1 == xioctl(fd, VIDIOC_QUERYCAP, &cap)) {

if (EINVAL == errno) {

sprintf(exceptionBuffer, "%s is no V4L2 device\n", DEVICE);

return error_exit(env);

} else {

return errno_exit(env, "VIDIOC_QUERYCAP");

}

}

if (!(cap.capabilities & V4L2_CAP_VIDEO_CAPTURE)) {

sprintf(exceptionBuffer, "%s is no video capture device\n", DEVICE);

return error_exit(env);

}

if (!(cap.capabilities & V4L2_CAP_STREAMING)) {

sprintf(exceptionBuffer, "%s does not support streaming i/o\n", DEVICE);

return error_exit(env);

}

/* Select video input, video standard and tune here. */

CLEAR(cropcap);

cropcap.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if (0 == xioctl(fd, VIDIOC_CROPCAP, &cropcap)) {

crop.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

crop.c = cropcap.defrect; /* reset to default */

if (-1 == xioctl(fd, VIDIOC_S_CROP, &crop)) {

switch (errno) {

case EINVAL:

/* Cropping not supported. */

break;

default:

/* Errors ignored. */

break;

}

}

} else {

/* Errors ignored. */

}

jfieldID width_id = (*env)->GetStaticFieldID(env, cls, "width", "I");

jfieldID height_id = (*env)->GetStaticFieldID(env, cls, "height", "I");

if (NULL == width_id || NULL == height_id) {

sprintf(exceptionBuffer, "width or height not present in Webcam.java");

return error_exit(env);

}

CLEAR(fmt);

fmt.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

fmt.fmt.pix.width = (*env)->GetStaticIntField(env, cls, width_id);

fmt.fmt.pix.height = (*env)->GetStaticIntField(env, cls, height_id);

fmt.fmt.pix.pixelformat = FORMAT;

fmt.fmt.pix.field = V4L2_FIELD_INTERLACED;

if (-1 == xioctl(fd, VIDIOC_S_FMT, &fmt)) {

return errno_exit(env, "VIDIOC_S_FMT");

}

(*env)->SetStaticIntField(env, cls, width_id, fmt.fmt.pix.width);

(*env)->SetStaticIntField(env, cls, height_id, fmt.fmt.pix.height);

/* Buggy driver paranoia. */

min = fmt.fmt.pix.width * 2;

if (fmt.fmt.pix.bytesperline < min)

fmt.fmt.pix.bytesperline = min;

min = fmt.fmt.pix.bytesperline * fmt.fmt.pix.height;

if (fmt.fmt.pix.sizeimage < min)

fmt.fmt.pix.sizeimage = min;

CLEAR(req);

req.count = 4;

req.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

req.memory = V4L2_MEMORY_MMAP;

if (-1 == xioctl(fd, VIDIOC_REQBUFS, &req)) {

if (EINVAL == errno) {

sprintf(exceptionBuffer, "%s does not support memory mapping\n", DEVICE);

return error_exit(env);

} else {

return errno_exit(env, "VIDIOC_REQBUFS");

}

}

if (req.count < 2) {

sprintf(exceptionBuffer, "Insufficient buffer memory on %s\n", DEVICE);

return error_exit(env);

}

buffers = calloc(req.count, sizeof(*buffers));

if (!buffers) {

sprintf(exceptionBuffer, "Out of memory\n");

return error_exit(env);

}

for (n_buffers = 0; n_buffers < req.count; ++n_buffers) {

struct v4l2_buffer buf;

CLEAR(buf);

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

buf.index = n_buffers;

if (-1 == xioctl(fd, VIDIOC_QUERYBUF, &buf))

return errno_exit(env, "VIDIOC_QUERYBUF");

buffers[n_buffers].length = buf.length;

buffers[n_buffers].start =

mmap(NULL /* start anywhere */,

buf.length,

PROT_READ | PROT_WRITE /* required */,

MAP_SHARED /* recommended */,

fd, buf.m.offset);

if (MAP_FAILED == buffers[n_buffers].start) {

return errno_exit(env, "mmap");

}

}

return -1;

}

jboolean start_capturing(JNIEnv *env) {

unsigned int i;

enum v4l2_buf_type type;

for (i = 0; i < n_buffers; ++i) {

struct v4l2_buffer buf;

CLEAR(buf);

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

buf.index = i;

if (-1 == xioctl(fd, VIDIOC_QBUF, &buf)) {

return errno_exit(env, "VIDIOC_QBUF");

}

}

type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if (-1 == xioctl(fd, VIDIOC_STREAMON, &type)) {

return errno_exit(env, "VIDIOC_STREAMON");

}

return -1;

}

JNIEXPORT void JNICALL Java_Webcam_setup(JNIEnv *env, jclass cls) {

if (open_device(env)) {

if (init_device(env, cls)) {

start_capturing(env);

}

}

}

void process_image(const void *p, int size, JNIEnv * env,

jbyteArray img) {

(*env)->SetByteArrayRegion(env, img, 0, size, (jbyte*)p);

}

jboolean read_frame(JNIEnv* env, jbyteArray img) {

struct v4l2_buffer buf;

CLEAR(buf);

buf.type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

buf.memory = V4L2_MEMORY_MMAP;

if (-1 == xioctl(fd, VIDIOC_DQBUF, &buf)) {

switch (errno) {

case EAGAIN:

return 0;

case EIO:

/* Could ignore EIO, see spec. */

/* fall through */

default:

return errno_exit(env, "VIDIOC_DQBUF");

}

}

assert(buf.index < n_buffers);

process_image(buffers[buf.index].start, buf.bytesused, env, img);

if (-1 == xioctl(fd, VIDIOC_QBUF, &buf)) {

return errno_exit(env, "VIDIOC_QBUF");

}

return -1;

}

JNIEXPORT void JNICALL Java_Webcam_grab(JNIEnv * env, jclass cls,

jbyteArray img) {

int timeouts = 0;

for (;;) {

fd_set fds;

struct timeval tv;

int r;

FD_ZERO(&fds);

FD_SET(fd, &fds);

/* Timeout. */

tv.tv_sec = 2;

tv.tv_usec = 0;

r = select(fd + 1, &fds, NULL, NULL, &tv);

if (-1 == r) {

if (EINTR != errno)

continue;

else {

errno_exit(env, "select");

return;

}

}

if (0 == r) {

timeouts++;

#ifdef REPORT_TIMEOUT

fprintf(stderr, "timeout %d (out of %d)\nTrying again", timeouts,

MAX_TIMEOUTS);

#endif

if (timeouts > MAX_TIMEOUTS) {

sprintf(exceptionBuffer, "select timeout\n");

error_exit(env);

return;

}

}

if (read_frame(env, img))

break;

/* EAGAIN - continue select loop. */

}

}

void stop_capturing(JNIEnv *env) {

enum v4l2_buf_type type;

type = V4L2_BUF_TYPE_VIDEO_CAPTURE;

if (-1 == xioctl(fd, VIDIOC_STREAMOFF, &type))

errno_exit(env, "VIDIOC_STREAMOFF");

}

void uninit_device(JNIEnv *env) {

unsigned int i;

for (i = 0; i < n_buffers; ++i)

if (-1 == munmap(buffers[i].start, buffers[i].length))

errno_exit(env, "munmap");

free(buffers);

}

void close_device(JNIEnv *env) {

if (-1 == close(fd))

errno_exit(env, "close");

fd = -1;

}

JNIEXPORT void JNICALL Java_Webcam_dispose(JNIEnv *env, jclass cls) {

stop_capturing(env);

uninit_device(env);

close_device(env);

}

Saturday, August 2, 2014

Webcam with Lego Mindstorms EV3, part 1 (kernel)

UPDATE:

The EV3 implementation of LeJOS now incorporates these changes. I highly recommend that you use LeJOS for your EV3 programming if you're a Java programmer interested in using a webcam. I have another post giving an example of using the webcam with LeJOS. If you're interested in using another system, this post may still be relevant for your purposes.

ORIGINAL POST:

Using a webcam with the Lego Mindstorms EV3 requires recompiling the kernel to include the device driver. This requires acquiring the following hardware and software:

I recommend using a UVC-compatible webcam, as all of them share the same kernel driver; a GSPCA-compatible webcam might also work, but you have to make sure its driver is included in the kernel. I personally have been using the Logitech C270. As the EV3 has a USB 1.1 port, make sure any webcam you employ is USB 1.1 compatible as well. A USB 2.0-only webcam probably won't work.

Cross-Compilation

I read somewhere that the case-insensitive file systems of both Windows and Mac machines can cause problems for cross-compiling the kernel, so I used a Linux machine. If you do not have one available, you can probably set up a Linux partition of your Windows or Mac machine.

We need to use a very specific cross-compiler. Go to http://go.mentor.com/2ig4q and download Sourcery G++ Lite 2009q1-203. I set mine up in a subdirectory off of my home directory (~/CodeSourcery), which I believe is what the build scripts will be expecting.

You'll need to install a couple of modules on your Linux machine for the cross-compilation to work:

Kernel Sources

I am using the LeJOS EV3 version of the kernel. They modified it significantly. One really cool modification is that the EV3 is network-visible (IP address 10.0.1.1) when plugged into your computer. You can open a shell, copy files, etc. Before trying to recompile and set up the kernel, it is important to download and set up the basic EV3 LeJOS system on a MicroSD card. We'll be copying the modified kernel over the distributed kernel, but everything else will remain the same.

To get a copy of the kernel sources from github, run the following line in your shell in the directory you'd like to put it. Then run the next line to get to the pertinent subdirectory:

The build_kernel.sh script is what compiles the kernel. The uImage file that it generates is the kernel image employed when booting. I would encourage you to run build_kernel.sh before going any further, to make sure everything you need is in place.

The first time I tried it, I got the following cryptic error message:

I resolved the error as follows:

Once you are able to rebuild the kernel, you are ready to activate the device drivers. To do this, you will need to edit LEGOBoard.config. For each option, you can specify "=n" to omit it, "=y" to compile it into the kernel, or "=m" to tell the kernel to look for it as an external module as needed. For all options to include, be sure to use "=y"; using "=m" requires compiling the modules separately, a needless hassle in this context.

Here is a comprehensive list of changes I made to LEGOBoard.config.

This first set of flags is sufficient to get it working with a UVC webcam such as my Logitech C270 (see http://www.ideasonboard.org/uvc/ for a list):

Once I had the above settings working with my webcam, I recompiled it again with the following additional settings that, I am guessing, should suffice for most webcams out there:

Finally, in order to avoid tediously answering "no" when recompiling, I added the following as well. Many of these were deprecated drivers; others had nothing to do with webcams as far as I could tell:

Having recompiled the kernel with these options, all that remains is to copy over the uImage file on the SD card with the uImage that you have just recompiled. To test whether it works, open a shell on the EV3 and see if /dev/video0 appears when you plug in the webcam. If it does, you have succeeded!

The EV3 implementation of LeJOS now incorporates these changes. I highly recommend that you use LeJOS for your EV3 programming if you're a Java programmer interested in using a webcam. I have another post giving an example of using the webcam with LeJOS. If you're interested in using another system, this post may still be relevant for your purposes.

ORIGINAL POST:

Using a webcam with the Lego Mindstorms EV3 requires recompiling the kernel to include the device driver. This requires acquiring the following hardware and software:

- An appropriate webcam

- A cross-compiler

- As far as I know, this will only work properly on a PC running Linux.

- EV3 kernel sources

I recommend using a UVC-compatible webcam, as all of them share the same kernel driver; a GSPCA-compatible webcam might also work, but you have to make sure its driver is included in the kernel. I personally have been using the Logitech C270. As the EV3 has a USB 1.1 port, make sure any webcam you employ is USB 1.1 compatible as well. A USB 2.0-only webcam probably won't work.

Cross-Compilation

I read somewhere that the case-insensitive file systems of both Windows and Mac machines can cause problems for cross-compiling the kernel, so I used a Linux machine. If you do not have one available, you can probably set up a Linux partition of your Windows or Mac machine.

We need to use a very specific cross-compiler. Go to http://go.mentor.com/2ig4q and download Sourcery G++ Lite 2009q1-203. I set mine up in a subdirectory off of my home directory (~/CodeSourcery), which I believe is what the build scripts will be expecting.

You'll need to install a couple of modules on your Linux machine for the cross-compilation to work:

Kernel Sources

I am using the LeJOS EV3 version of the kernel. They modified it significantly. One really cool modification is that the EV3 is network-visible (IP address 10.0.1.1) when plugged into your computer. You can open a shell, copy files, etc. Before trying to recompile and set up the kernel, it is important to download and set up the basic EV3 LeJOS system on a MicroSD card. We'll be copying the modified kernel over the distributed kernel, but everything else will remain the same.

To get a copy of the kernel sources from github, run the following line in your shell in the directory you'd like to put it. Then run the next line to get to the pertinent subdirectory:

The build_kernel.sh script is what compiles the kernel. The uImage file that it generates is the kernel image employed when booting. I would encourage you to run build_kernel.sh before going any further, to make sure everything you need is in place.

The first time I tried it, I got the following cryptic error message:

I resolved the error as follows:

Once you are able to rebuild the kernel, you are ready to activate the device drivers. To do this, you will need to edit LEGOBoard.config. For each option, you can specify "=n" to omit it, "=y" to compile it into the kernel, or "=m" to tell the kernel to look for it as an external module as needed. For all options to include, be sure to use "=y"; using "=m" requires compiling the modules separately, a needless hassle in this context.

Here is a comprehensive list of changes I made to LEGOBoard.config.

This first set of flags is sufficient to get it working with a UVC webcam such as my Logitech C270 (see http://www.ideasonboard.org/uvc/ for a list):

- Code:

CONFIG_MEDIA_SUPPORT=y

CONFIG_MEDIA_CAMERA_SUPPORT=y

CONFIG_MEDIA_USB_SUPPORT=y

CONFIG_USB_VIDEO_CLASS=y

CONFIG_USB_VIDEO_CLASS_INPUT_EVDEV=y

CONFIG_VIDEO_DEV=y

CONFIG_VIDEO_CAPTURE_DRIVERS=y

CONFIG_V4L_USB_DRIVERS=y

CONFIG_VIDEO_HELPER_CHIPS_AUTO=y

CONFIG_VIDEO_VPSS_SYSTEM=y

CONFIG_VIDEO_VPFE_CAPTURE=y

CONFIG_USB_PWC_INPUT_EVDEV=y

CONFIG_VIDEO_ADV_DEBUG=y

CONFIG_VIDEO_ALLOW_V4L1=y

CONFIG_VIDEO_V4L1_COMPAT=y

Once I had the above settings working with my webcam, I recompiled it again with the following additional settings that, I am guessing, should suffice for most webcams out there:

- Code:

CONFIG_USB_ZR364XX=y

CONFIG_USB_STKWEBCAM=y

CONFIG_USB_S2255=y

CONFIG_USB_GSPCA=y

CONFIG_USB_M5602=y

CONFIG_USB_STV06XX=y

CONFIG_USB_GL860=y

CONFIG_USB_GSPCA_CONEX=y

CONFIG_USB_GSPCA_ETOMS=y

CONFIG_USB_GSPCA_FINEPIX=y

CONFIG_USB_GSPCA_JEILINJ=y

CONFIG_USB_GSPCA_MARS=y

CONFIG_USB_GSPCA_MR97310A=y

CONFIG_USB_GSPCA_OV519=y

CONFIG_USB_GSPCA_OV534=y

CONFIG_USB_GSPCA_PAC207=y

CONFIG_USB_GSPCA_PAC7302=y

CONFIG_USB_GSPCA_PAC7311=y

CONFIG_USB_GSPCA_SN9C20X=y

CONFIG_USB_GSPCA_SN9C20X_EVDEV=y

CONFIG_USB_GSPCA_SONIXB=y

CONFIG_USB_GSPCA_SONIXJ=y

CONFIG_USB_GSPCA_SPCA500=y

CONFIG_USB_GSPCA_SPCA501=y

CONFIG_USB_GSPCA_SPCA505=y

CONFIG_USB_GSPCA_SPCA506=y

CONFIG_USB_GSPCA_SPCA508=y

CONFIG_USB_GSPCA_SPCA561=y

CONFIG_USB_GSPCA_SQ905=y

CONFIG_USB_GSPCA_SQ905C=y

CONFIG_USB_GSPCA_STK014=y

CONFIG_USB_GSPCA_STV0680=y

CONFIG_USB_GSPCA_SUNPLUS=y

CONFIG_USB_GSPCA_T613=y

CONFIG_USB_GSPCA_TV8532=y

CONFIG_USB_GSPCA_VC032X=y

CONFIG_USB_GSPCA_ZC3XX=y

CONFIG_USB_KONICAWC=y

CONFIG_USB_SE401=y

CONFIG_USB_SN9C102=y

CONFIG_USB_ZC0301=y

CONFIG_USB_PWC=y

CONFIG_USB_PWC_DEBUG=y

Finally, in order to avoid tediously answering "no" when recompiling, I added the following as well. Many of these were deprecated drivers; others had nothing to do with webcams as far as I could tell:

- Code:

CONFIG_DVB_CORE=n

CONFIG_MEDIA_ATTACH=n

CONFIG_MEDIA_TUNER_CUSTOMISE=n

CONFIG_VIDEO_FIXED_MINOR_RANGES=n

CONFIG_VIDEO_DAVINCI_VPIF_DISPLAY=n

CONFIG_VIDEO_DAVINCI_VPIF_CAPTURE=n

CONFIG_VIDEO_VIVI=n

CONFIG_VIDEO_CPIA=n

CONFIG_VIDEO_CPIA2=n

CONFIG_VIDEO_SAA5246A=n

CONFIG_VIDEO_SAA5249=n

CONFIG_SOC_CAMERA=n

CONFIG_VIDEO_PVRUSB2=n

CONFIG_VIDEO_HDPVR=n

CONFIG_VIDEO_EM28XX=n

CONFIG_VIDEO_CX231XX=n

CONFIG_VIDEO_USBVISION=n

CONFIG_USB_VICAM=n

CONFIG_USB_IBMCAM=n

CONFIG_USB_QUICKCAM_MESSENGER=n

CONFIG_USB_ET61X251=n

CONFIG_VIDEO_OVCAMCHIP=n

CONFIG_USB_OV511=n

CONFIG_USB_STV680=n

CONFIG_RADIO_ADAPTERS=n

CONFIG_DAB=n

Having recompiled the kernel with these options, all that remains is to copy over the uImage file on the SD card with the uImage that you have just recompiled. To test whether it works, open a shell on the EV3 and see if /dev/video0 appears when you plug in the webcam. If it does, you have succeeded!

Lego Mindstorms, Past and Future

I've been a longtime user of the Lego Mindstorms robots. I have used them primarily for teaching the Hendrix College course Robotics Explorations Studio. I also have used them in some of my published work. I employed the original Mindstorms RCX for several years. In spite of its limitations (32K RAM, 3-character output screen, limited sensors), it was great fun to use. It was powerful enough to run LeJOS, a port of the Java virtual machine. In general, Lego has done a great job of making the software and hardware of the Mindstorms robots sufficiently open to enable developers to create their own operating systems to meet their needs, and the LeJOS series has been a great example of this.

Starting in 2008, we transitioned to Lego Mindstorms NXT. I created a lab manual for the course employing the NXT and the pblua language. It was, overall, a dramatic improvement to the product that opened up many new possibilities. Significant improvements incorporated into the NXT included:

Starting in 2008, we transitioned to Lego Mindstorms NXT. I created a lab manual for the course employing the NXT and the pblua language. It was, overall, a dramatic improvement to the product that opened up many new possibilities. Significant improvements incorporated into the NXT included:

- A fourth sensor port.

- Rotation sensors incorporated into the motors.

- A distance sensor (ultrasonic).

- Many new third-party sensors (e.g. compass, gyroscope)

- 64K RAM.

- A rechargeable battery.

- A 100x60 pixel LCD output.

- A USB connection for uploading programs.

- A 32 bit ARM7 CPU.

The most recent incarnation is Lego Mindstorms EV3. Superficially, it might not appear to be as large an improvement as the RCX to NXT transition. The LCD screen is slightly larger (178x128 pixels), the kit includes some new types of sensors and motors, and it has a fourth motor port (to match the four sensor ports).

But under the surface, the changes are again revolutionary:

- 64 megabytes of RAM (i.e., three orders of magnitude more!)

- The ARM9 CPU is six times faster (300 MHz).

- Its operating system kernel is a version of Linux.

- It has a micro-SD slot (allowing for up to 32 gigabytes of persistent storage).

- It has a USB port.

I have always been interested in using image processing as a robotic sensor. Up until now, I have been placing a netbook atop a specially-designed NXT model, and using the netbook's webcam. But the USB port means that a webcam can now be plugged directly into a Mindstorms robot, with image processing taking place as part of the EV3 program.

As it happens, it is easier to observe that this is a possibility than to implement it.

Due to my familiarity with Java, and the maturity of the LeJOS project, I've decided to try to make this work using the EV3 version of LeJOS. This requires the following steps:

- Compile the USB webcam device drivers into the kernel.

- Write a Java Native Interface driver to access the webcam from Java.

- Write a Java program that calls the JNI driver to employ the webcam.

In writing the above posts, I learned that the Blogger interface provides no obvious means for formatting source code. A very convenient page that generates the formatted code is http://codeformatter.blogspot.com/.

I've put together a short demo that you can watch of the EV3 LCD screen showing video in real-time.

I've put together a short demo that you can watch of the EV3 LCD screen showing video in real-time.

Monday, July 21, 2014

Essays about the MOOC phenomenon

About a year ago, I participated in a project by the Associated Colleges of the South to explore the implications of MOOCs for liberal arts colleges. I signed up for (and completed) a Udacity course as part of this project. I wrote seven posts for a group blog about my observations and experiences. Here is a list of links to my posts on the topic, in chronological order:

Saturday, May 24, 2014

The Unlock Project

When reading the comments under an "Ask Slashdot" story about a reader describing a family member with locked-in syndrome, I learned about the Unlock Project. In their FAQ, they mention that they are seeking volunteer programmers to write Python code for the project. It looks like it would be a great opportunity to contribute for those who have the skills and spare time.

Thursday, May 15, 2014

Robot cars, utilitarian ethics, and free software

Robot cars could very well be programmed to kill their passengers under certain circumstances, namely, when doing so would save a larger number of lives. In other words, they would be programmed to be utilitarians.

The idea that the end justifies the means has been heavily criticized as the basis for a general moral theory. Given the wide variety of viewpoints on the matter, it seems to me that the only sensible approach is to ensure that passengers of robot cars have full control over the software that runs them. The free software movement has been highly successful in their endeavor of giving users control over desktop PCs. The movement is working towards the same goals with regard to smartphones.

For the sake of safety and giving users control over their environment, extending the free software movement to robots of all kinds may well be a moral imperative.

The idea that the end justifies the means has been heavily criticized as the basis for a general moral theory. Given the wide variety of viewpoints on the matter, it seems to me that the only sensible approach is to ensure that passengers of robot cars have full control over the software that runs them. The free software movement has been highly successful in their endeavor of giving users control over desktop PCs. The movement is working towards the same goals with regard to smartphones.

For the sake of safety and giving users control over their environment, extending the free software movement to robots of all kinds may well be a moral imperative.

Thursday, May 8, 2014

MAICS-2014

A couple of weeks ago, I had the privilege of attending MAICS 2014 in Spokane, Washington. It was my first visit to the state, and Spokane is a beautiful city.

I presented the work described in my paper "Creating Visual Reactive Robot Behaviors Using Growing Neural Gas". It was well-received, and I got some useful and interesting feedback during the question period. One particularly noteworthy observation was that I could consider incorporating color into the GNG nodes. In my current implementation, I strictly use intensity values. Creating an HSI-based GNG node should be straightforward. I'm looking forward to experimenting with this approach when I get a chance. (For more details, including videos, check out a previous blog post describing my work.)

I enjoyed most of the paper presentations. Here are some themes I identified in this set of papers and presentations:

Every time I teach my artificial intelligence course, I cover several different supervised learning algorithms. A consequence of this is that inevitably several students become interested in methods for combining classifiers. Joe Dumoulin of NextIT Corporation presented an interesting method for doing exactly that. If future students wish to experiment with this concept, I'll point them towards this paper.

I have always been most interested in exploring the idea of an intelligent agent through mobile robotics. The presentation by Chayan Chakrabarti and George Luger persuaded me that I need to pay some attention to advances in interactive chat systems. I plan to investigate how I might create a programming project that is simple enough to be completed within a week or two but sophisticated enough to include concepts akin to those described in that paper.

Syoji Kobashi presented some excellent work he had done in automated detection of candidate sites for brain aneurysms. His system builds a 3D model of cerebral blood vessels, and then does some pattern matching to identify candidate trouble spots. It was a great example of how sheer hard work and persistence with a difficult problem can pay off with excellent results.

Yuki and Noriaki Nakagawa gave a live demonstration of a new robot arm they have designed. Their insight is that all too frequently the utility of robots is limited by the perception (and reality) that it is too dangerous for humans to touch them. To ameliorate this, they are developing robots that can interact safely with humans, even to the extent of tactile interaction. The arm they demonstrated featured a very clever design. The gripper is effective but physically incapable of harming a human by crushing. I enjoyed the opportunity to physically interact with the device, and I look forward to seeing what other innovations their company produces. (You can see me arm-wrestling the device in the photo below.)

I'd like to thank Paul DePalma and Atsushi Inoue for all of their hard work putting the conference together. I am looking forward to attending MAICS-2015 in Greensboro, North Carolina.

I presented the work described in my paper "Creating Visual Reactive Robot Behaviors Using Growing Neural Gas". It was well-received, and I got some useful and interesting feedback during the question period. One particularly noteworthy observation was that I could consider incorporating color into the GNG nodes. In my current implementation, I strictly use intensity values. Creating an HSI-based GNG node should be straightforward. I'm looking forward to experimenting with this approach when I get a chance. (For more details, including videos, check out a previous blog post describing my work.)

I enjoyed most of the paper presentations. Here are some themes I identified in this set of papers and presentations:

- Fuzzy logic remains a go-to technique for bridging natural language and numerically-defined problem spaces.

- The deceptively simple naive Bayes classifier retains a strong following.

- Much progress has been made in creating interactive chat systems, an area to which I have not been paying much attention.

Every time I teach my artificial intelligence course, I cover several different supervised learning algorithms. A consequence of this is that inevitably several students become interested in methods for combining classifiers. Joe Dumoulin of NextIT Corporation presented an interesting method for doing exactly that. If future students wish to experiment with this concept, I'll point them towards this paper.

I have always been most interested in exploring the idea of an intelligent agent through mobile robotics. The presentation by Chayan Chakrabarti and George Luger persuaded me that I need to pay some attention to advances in interactive chat systems. I plan to investigate how I might create a programming project that is simple enough to be completed within a week or two but sophisticated enough to include concepts akin to those described in that paper.

Syoji Kobashi presented some excellent work he had done in automated detection of candidate sites for brain aneurysms. His system builds a 3D model of cerebral blood vessels, and then does some pattern matching to identify candidate trouble spots. It was a great example of how sheer hard work and persistence with a difficult problem can pay off with excellent results.

Yuki and Noriaki Nakagawa gave a live demonstration of a new robot arm they have designed. Their insight is that all too frequently the utility of robots is limited by the perception (and reality) that it is too dangerous for humans to touch them. To ameliorate this, they are developing robots that can interact safely with humans, even to the extent of tactile interaction. The arm they demonstrated featured a very clever design. The gripper is effective but physically incapable of harming a human by crushing. I enjoyed the opportunity to physically interact with the device, and I look forward to seeing what other innovations their company produces. (You can see me arm-wrestling the device in the photo below.)

I'd like to thank Paul DePalma and Atsushi Inoue for all of their hard work putting the conference together. I am looking forward to attending MAICS-2015 in Greensboro, North Carolina.

Friday, May 2, 2014

Show, don't tell your robot what you want

It would be valuable to get a robot to do what you want without you having to program it. If you could just show it what you want, and have it understand that, much programming effort could be saved.

I've recently taken a first step towards creating such a system. It is very much a prototype at the moment. You can read my recent paper for the details. Here is an outline of the basic idea:

I've recently taken a first step towards creating such a system. It is very much a prototype at the moment. You can read my recent paper for the details. Here is an outline of the basic idea:

- Drive a camera-equipped robot through a target area.

- Have the robot build a Growing Neural Gas network of the images it acquires as it drives around.

- Growing Neural Gas is an unsupervised learning algorithm.

- It generates clusters of its inputs using a distance metric.

- It is closely related to k-means and the Kohonen Self-Organizing Map.

- The number of clusters is determined by the algorithm.

- For each cluster, select a desired action.

- Set the robot free.

- As it acquires each image, it determines the matching cluster.

- It then executes the action for that cluster.

Here is a screenshot of the "programming" interface. This example was from a 26-node GNG. Each cluster has a representative image. The action is selected from a drop-down menu. For these first experiments, we are training the robot on an obstacle-avoidance task. The goal is to maximize forward motion while avoiding obstacles.

Needless to say, the system is not perfect at this stage. The biggest problem is that some of the clusters are host to ambiguous actions. So sometimes the robot reacts to "ghosts", and other times it hits obstacles. Going forward, a major research focus will be to find a way to automate additional growing of the network to compensate for this problem.

I'd like to share two videos of the robot in action. In the first video, the robot does a good job of avoiding obstacles until it gets stuck under a table:

In the second video, the robot sees a number of ghosts, but for the most part it maintains good forward motion for over five minutes:

Idealistic students and the job market

In class on Tuesday, during a discussion of computing ethics, the students were of the opinion that it is unethical for companies to require that they sign away all intellectual property rights for the duration of their employment. They were also speculating as to whether it is unethical for them to even agree to work for such a company.

Such clauses are common, of course, because programming jobs do not lend themselves to being defined in terms of "hours worked". Since usable work can be (and is) produced at all hours of the day or night, it makes sense from the company's point of view to claim ownership of all ideas produced by the employee.

It was interesting to juxtapose this discussion with an experience I had at a conference (MAICS 2014) the previous weekend. Two research presentations were given by employees of a company, NextIT, which develops interactive customer-service systems for its clients. Now, I do not know if NextIT maintains an intellectual-property clause in its employee contracts, but it was notable that its employees were presenting the technical details of innovations they had created in order to make their systems work better, with the encouragement of their employer.

This suggests that disclosure of ideas is not necessarily the commercial-suicide scenario businesses seem to fear. Removing such clauses may well attract stronger employees with a higher sense of goodwill without really doing any damage to the company's business plans. It would be very much worth examining whether the harm done by such clauses exceeds their (alleged) benefits.

Such clauses are common, of course, because programming jobs do not lend themselves to being defined in terms of "hours worked". Since usable work can be (and is) produced at all hours of the day or night, it makes sense from the company's point of view to claim ownership of all ideas produced by the employee.

It was interesting to juxtapose this discussion with an experience I had at a conference (MAICS 2014) the previous weekend. Two research presentations were given by employees of a company, NextIT, which develops interactive customer-service systems for its clients. Now, I do not know if NextIT maintains an intellectual-property clause in its employee contracts, but it was notable that its employees were presenting the technical details of innovations they had created in order to make their systems work better, with the encouragement of their employer.

This suggests that disclosure of ideas is not necessarily the commercial-suicide scenario businesses seem to fear. Removing such clauses may well attract stronger employees with a higher sense of goodwill without really doing any damage to the company's business plans. It would be very much worth examining whether the harm done by such clauses exceeds their (alleged) benefits.

Sunday, April 27, 2014

Welcome!

Welcome to my blog! My name is Gabriel Ferrer, and I am an Associate Professor of Computer Science at Hendrix College in Conway, Arkansas.

I have titled this blog "Computing Intelligently". As I have a strong interest in Artificial Intelligence, Machine Learning, Robotics, and related technologies, I hope to provide informed commentary on developments in those areas. My interests in computer science are broad, though, so whatever subject in computing happens to be the object of my commentary, I aspire to do so intelligently.

I do not plan to post on a strict calendar; rather, it is my aspiration to post whenever I feel I have something worthwhile to say. Feel free to subscribe to my RSS feed if you would like to be informed of updates.

I have titled this blog "Computing Intelligently". As I have a strong interest in Artificial Intelligence, Machine Learning, Robotics, and related technologies, I hope to provide informed commentary on developments in those areas. My interests in computer science are broad, though, so whatever subject in computing happens to be the object of my commentary, I aspire to do so intelligently.

I do not plan to post on a strict calendar; rather, it is my aspiration to post whenever I feel I have something worthwhile to say. Feel free to subscribe to my RSS feed if you would like to be informed of updates.

Subscribe to:

Comments (Atom)